Large language models (LLMs) can understand and generate human language, but their predictions can be unreliable, which matters most in high-stakes situations. This article explores selective prediction, a technique that lets an LLM assess its own confidence and abstain from answering when that confidence is too low.

The Problem: LLMs Lack Reliable Confidence Estimates

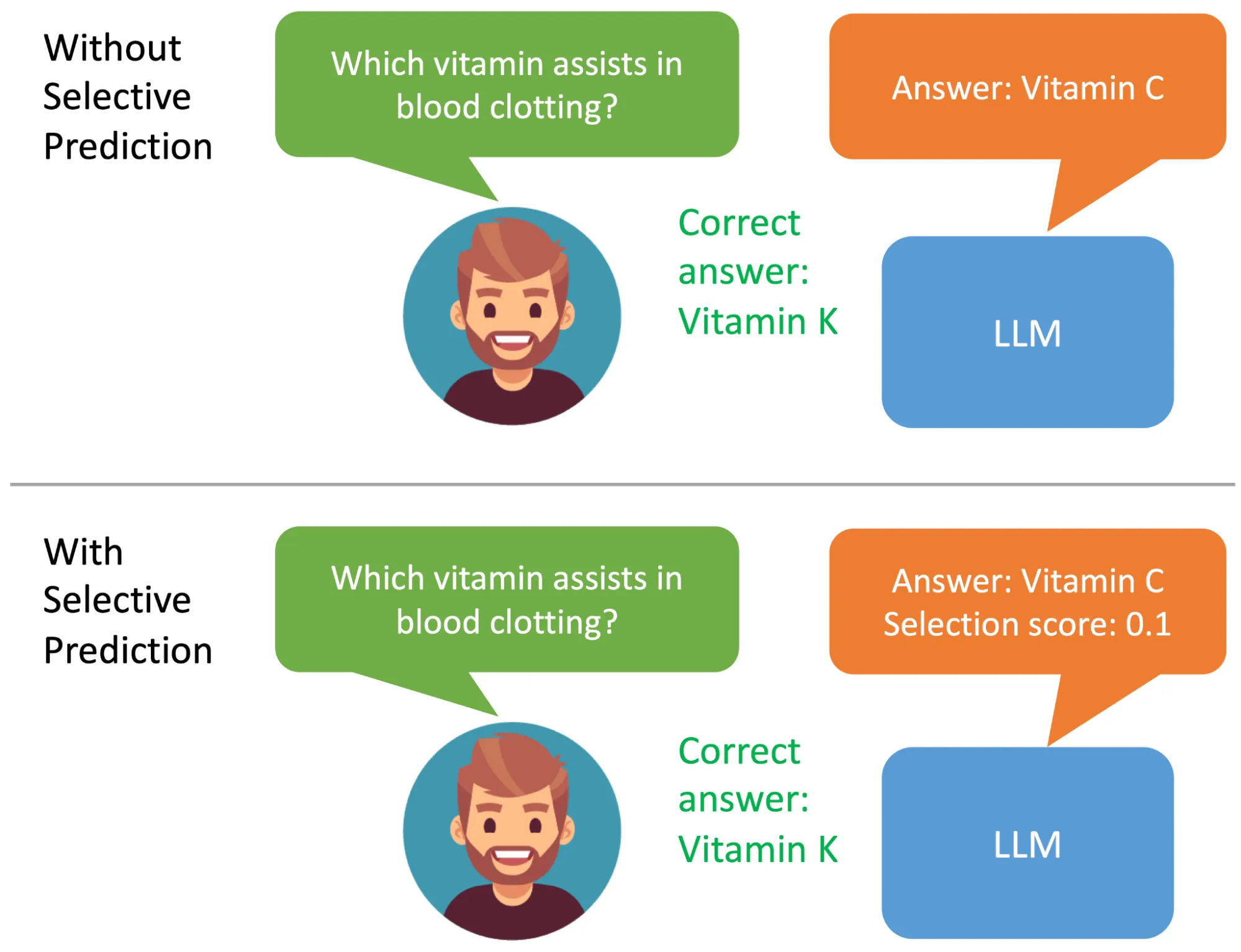

Imagine a student who can answer questions but doesn’t know if their answers are correct. This is similar to how traditional LLMs work. They can provide answers, but they can’t tell you how trustworthy those answers are.

Selective Prediction to the Rescue

Selective prediction equips LLMs with the ability to judge their own answers. Here’s how it works:

- Training: LLMs are trained on specific tasks and question-answering datasets.

- Answer Generation: The LLM generates multiple possible answers for each question.

- Self-Evaluation: The LLM analyzes the generated answers and assigns a “confidence score” to each one. This score indicates how likely the answer is to be correct.
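The last two steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Candidate` class, the `selective_predict` function, the confidence values, and the 0.7 threshold are all hypothetical, and in practice the scores would come from the model's own self-evaluation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    answer: str
    confidence: float  # self-evaluation score in [0, 1]; values below are made up


def selective_predict(candidates: List[Candidate], threshold: float = 0.7) -> Optional[str]:
    """Return the most confident answer, or None to abstain."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: c.confidence)
    # Answer only when the best score clears the (assumed) threshold.
    return best.answer if best.confidence >= threshold else None


candidates = [
    Candidate("Paris", 0.92),
    Candidate("Lyon", 0.05),
    Candidate("Marseille", 0.03),
]
print(selective_predict(candidates))                   # Paris
print(selective_predict(candidates, threshold=0.95))   # None (the model abstains)
```

Returning `None` instead of a low-confidence guess is what makes the prediction "selective": the caller can route abstained questions to a human or a fallback system.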

Benefits of Selective Prediction

- Improved Reliability: With confidence scores, users can better understand how trustworthy the LLM’s answers are.

- Reduced Errors: By answering only when they are confident and abstaining otherwise, LLMs provide incorrect information less often.

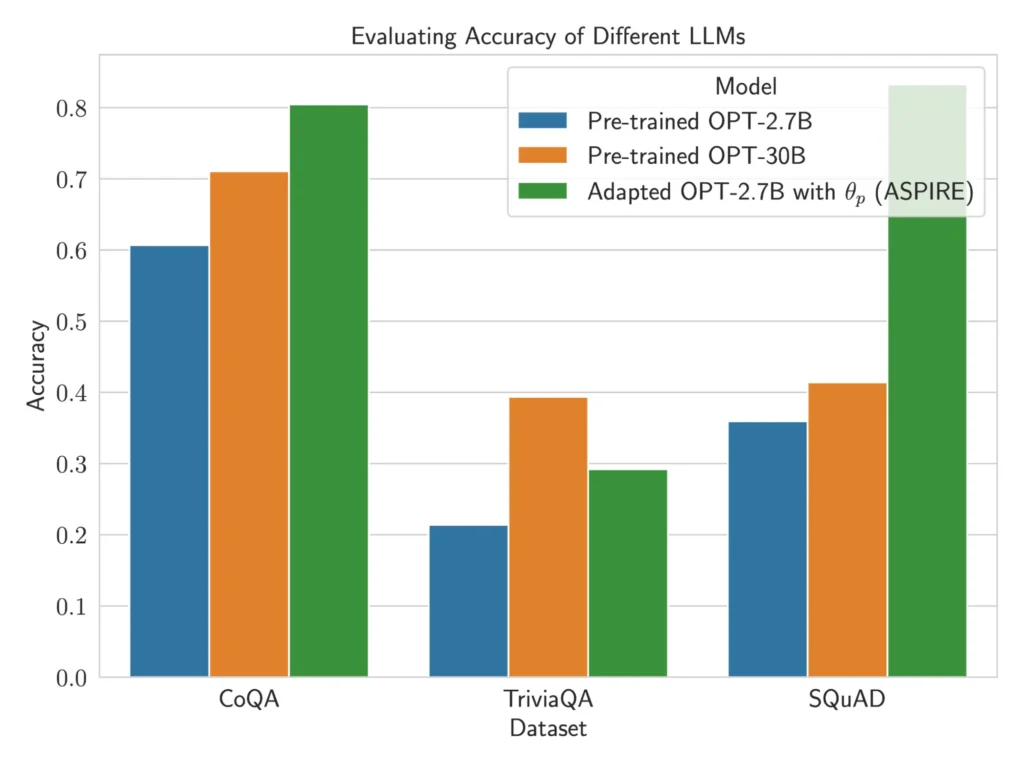

- Smaller Models, Better Performance: Selective prediction can enhance the performance of smaller LLMs, making them potentially more reliable than larger models in some situations.
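The trade-off behind these benefits can be made concrete with a small sketch. It assumes we already have a confidence score and a correctness label for each question; the function name `coverage_and_accuracy` and the numbers are invented for illustration and are not results from any model.

```python
def coverage_and_accuracy(confidences, correct, threshold):
    """Coverage is the fraction of questions answered; accuracy is
    measured only on the answered ones. Assumes a non-empty input."""
    answered = [ok for conf, ok in zip(confidences, correct) if conf >= threshold]
    coverage = len(answered) / len(confidences)
    accuracy = sum(answered) / len(answered) if answered else None
    return coverage, accuracy


# Hypothetical scores and correctness labels for six questions.
confidences = [0.95, 0.90, 0.80, 0.60, 0.40, 0.30]
correct = [True, True, True, False, True, False]

for threshold in (0.0, 0.5, 0.85):
    print(threshold, coverage_and_accuracy(confidences, correct, threshold))
```

With these made-up values, raising the threshold from 0.0 to 0.85 cuts coverage from 6 of 6 questions to 2 of 6, while accuracy on the answered questions rises from 4 of 6 to 2 of 2. The model answers less often, but what it does answer is more trustworthy.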

ASPIRE: A Framework for Selective Prediction

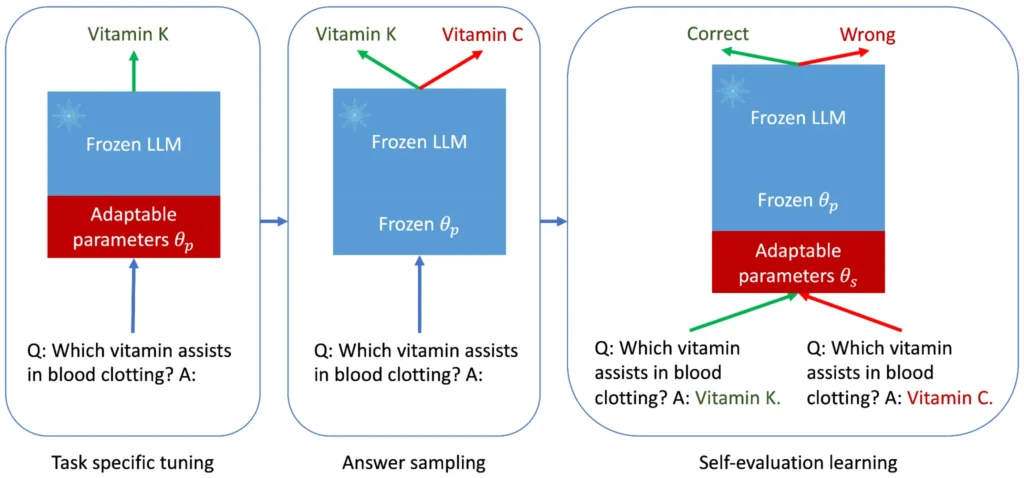

Researchers at Google AI developed ASPIRE, a framework specifically designed to improve selective prediction in LLMs. ASPIRE uses a three-stage process:

- Task-Specific Tuning: Adapts the LLM to a specific task, improving its answer accuracy.

- Answer Sampling: Generates multiple possible answers for each question.

- Self-Evaluation Learning: Trains the LLM to evaluate its own answers and assign confidence scores.
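To give a feel for the data the third stage might learn from, here is a minimal sketch that labels sampled answers as correct or incorrect against a reference answer. It is not ASPIRE's implementation: the function names and the exact-match comparison are simplifying assumptions, since the article does not say how correctness is determined.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def build_self_eval_examples(question, sampled_answers, reference):
    """Pair each sampled answer with a correct/incorrect label.

    Exact match after normalization is an assumption made for this sketch.
    """
    examples = []
    for answer in sampled_answers:
        examples.append({
            "question": question,
            "answer": answer,
            "is_correct": normalize(answer) == normalize(reference),
        })
    return examples


examples = build_self_eval_examples(
    "What is the capital of France?",
    ["Paris", " paris ", "Lyon"],
    reference="Paris",
)
for example in examples:
    print(example["answer"].strip(), example["is_correct"])
```

Labeled pairs like these are the kind of signal a model could be trained on to predict whether its own answer is right, which is what the confidence score represents.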

The Future of Selective Prediction

Selective prediction is a significant step towards making LLMs more reliable and trustworthy. A model that can signal when it is unsure, and abstain instead of guessing, is better suited to high-stakes applications. The approach may also inform the development of the next generation of LLMs.
