- 0

- 0

Leveraging the full potential of LLMs requires choosing the right technique between retrieval-augmented generation (RAG) and fine-tuning.

Let’s examines when to use RAG versus fine-tuning for LLMs, smaller models, and pre-trained models. We’ll cover:

- Brief background on LLMs and RAG

- RAG advantages over fine-tuning LLMs

- When to fine-tune vs RAG for different model sizes

- Using RAG and fine-tuning for pre-trained models

- Financial services examples for RAG and fine-tuning

- Practical considerations and recommendations

Background on LLMs and RAG

Large language models utilize a technique called pre-training on massive text datasets like the internet, code, social media and books. This allows them to generate text, answer questions, translate languages, and more without any task-specific data. However, their knowledge is still limited.

Retrieval-augmented generation enhances LLMs by retrieving relevant knowledge from a database as context before generating text. For example, a financial advisor LLM could retrieve a client’s investment history and profile before suggesting financial recommendations.

Retrieval-augmentation combines the benefits of LLMs ability to understand language with the relevant knowledge in a domain-specific database. This makes RAG systems more knowledgeable, consistent, and safe compared to vanilla LLMs.

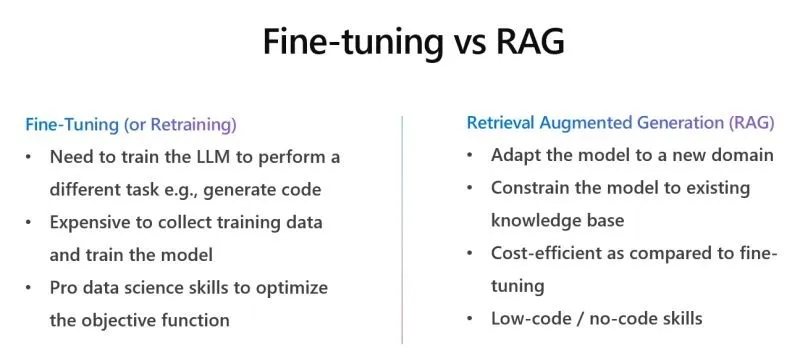

RAG Advantages Over Fine-tuning LLMs

Fine-tuning adapts a pre-trained LLM to a specific task by training it on domain-specific data. For example, a pre-trained LLM can be fine-tuned on financial documents to improve its finance knowledge.

However, fine-tuning has several downsides compared to retrieval-augmentation:

- Forgetting: Fine-tuned models often forget or lose capabilities from pre-training. For example, a finance fine-tuned LLM may no longer handle general conversational tasks well.

- Training data dependence: Performance is entirely reliant on the quantity and quality of available training data. Collecting high-quality data is expensive.

- Lacks external knowledge: The model only knows what’s in its training data, and lacks real-world knowledge.

- Not customizable: Changes to the fine-tuned model require retraining which is expensive.

In contrast, RAG systems:

- Retain capabilities from pre-training since the LLM itself is not modified.

- Augment the LLM with customizable external knowledge sources like databases.

- Allow changing knowledge sources without retraining the LLM.

- Have lower data requirements since the LLM is not retrained.

Therefore, RAG systems often achieve better performance than fine-tuning while retaining more capabilities of the original LLM.

When to Fine-Tune vs RAG for Different Model Sizes

The choice between fine-tuning and RAG depends on the model size:

Large Language Models

For massive models like GPT-4 with Trillions of parameters, RAG is generally preferable over fine-tuning:

- Retains pre-training capabilities: Fine-tuning risks forgetting abilities like conversing, translating, analyzing, etc. that require a model of GPT-4’s scale.

- Utilizes external knowledge: GPT-4/Llama-2 lacks world knowledge, whereas RAG augments it with external databases.

- Avoids catastrophic forgetting: Where fine-tuning may damage LLMs versatile abilities.

- Flexible knowledge sources: RAG knowledge sources can be changed without expensive retraining.

Unless the fine-tuned task is very similar to pre-training or requires memorization, RAG suits LLMs better.

Medium Language Models

For mid-size models like Llama 2 7B, Falcon 7B, Alpaca 7B with hundreds of millions of parameters, both RAG and fine-tuning are viable options:

- Fine-tuning may be preferred for objectives relying heavily on memorization like question answering over documents.

- RAG can be advantageous for domain-specific generation or classification tasks by retrieving relevant knowledge.

Evaluate whether retaining the full general knowledge of a mid-size LLM matters for your use case when choosing.

Small Language Models

For smaller custom models like Zephyr, Phi2 and Orca with thousands to millions of parameters, fine-tuning is often more suitable than RAG:

- Small models already lack the general capabilities of larger models.

- Training them on domain-specific data via fine-tuning imbues knowledge directly.

- Little risk of catastrophic forgetting since they have minimal pre-training.

- Easy to retrain small models with new data when needed.

Unless you specifically need to retain breadth of knowledge, fine-tuning small models is preferable over RAG.

Using RAG and Fine-tuning for Pre-trained Models

Both RAG and fine-tuning are applicable strategies for adapting pre-trained models — whether LLMs like BERT, ELMo, RoBERTa or smaller custom models.

RAG for Pre-Trained Models

Applying RAG to pre-trained models is effective when:

- Leveraging general knowledge — The model has strong underlying capabilities you wish to retain like conversing, analyzing, etc.

- Minimizing forgetting — Further training risks damaging versatile general abilities.

- Utilizing external knowledge — Augmenting with retrieved domain-specific knowledge is valuable.

- Flexible Knowledge — The knowledge needs change often, so swappable databases are preferred over retraining.

For example, RAG suits large conversational models to avoid forgetting general chat abilities while augmenting them with domain knowledge.

Fine-tuning Pre-Trained Models

Fine-tuning is advantageous for pre-trained models when:

- Specialized focus — The end-task relies heavily on specialized knowledge vs general abilities.

- Memorization — Memorizing domain-specific data like customer profiles is crucial.

- Static knowledge — The knowledge requirements are relatively fixed, reducing the need for swappable databases.

- Small model size — For smaller models, fine-tuning can directly infuse knowledge without much risk of catastrophic forgetting.

For instance, fine-tuning is effective for training customer service chatbots with static company policies and responses.

Examples of Applying RAG and Fine-Tuning

Let’s consider Financial services use cases differ in their general knowledge vs domain-specific knowledge needs — impacting the RAG vs fine-tuning choice.

Investment Management and Advice

Providing custom investment management and financial advice requires both strong general conversational ability and domain-specific knowledge.

RAG suits these applications well by:

- Augmenting a conversational model like GPT-4 with client profiles and investment data for personalized advice.

- Retrieving market data, past performance, and research to generate informed investment strategies.

- Updating databases frequently with new market information without retraining the underlying model.

Fine-tuning risks damaging the general conversational capabilities critical for communicating effectively with clients.

Insurance Claim Processing

Processing insurance claims heavily involves analyzing documents, extracting key information, validating claims against policies, and generating claim reports.

Fine-tuning a mid-size model like Llama 2 7B, Falcon 7B on past claims and policies fits this use case:

- Memorizing insurance policies is crucial — better handled via fine-tuning than an external database.

- Static bodies of policies and claims data versus rapidly changing knowledge.

- Domain-specific tasks focused on forms analysis versus general conversational ability.

Customer Service Chatbots

Chatbots handling customer service for financial services often require both broad conversational competence and knowledge of company-specific FAQs, policies, and scripts:

- A combined approach using RAG for general chitchat and fine-tuning for company knowledge works well.

- Fine-tune a small chatbot model on internal datasets to directly embed company knowledge.

- Augment via RAG by retrieving appropriate FAQs, account details, and scripts when needed.

This balances broad conversational ability with deep company-specific knowledge.

Anti-Money Laundering Text Analysis

Analyzing financial documents and client data to detect money laundering and financial crime leans heavily on learned patterns and domain terminology:

- Fine-tuning a text classification model on AML data makes sense given the specialized focus.

- Static knowledge of laws, regulations, patterns of suspicious activity.

- General conversational skills are less important.

Directly training a model via fine-tuning suits this specialized text analysis task better than RAG.

Client Profile Keyword Extraction

Extracting relevant keywords like assets, financial goals, risk tolerance from client documents and correspondence requires basic language understanding without much domain knowledge:

- An off-the-shelf LLM serves well here without much tuning needed.

- No need to memorize domain terminology or patterns.

- General language capabilities provide enough signal.

- RAG’s advantages are minimal for this generalized task.

Using an off-the-shelf model as-is often works better than extensive fine-tuning or RAG.

Key Practical Considerations for RAG and Fine-Tuning

- Access to LLMs — RAG requires access to large pretrained models. Developing smaller custom models in-house is more accessible.

- Data availability — Fine-tuning requires sizable domain-specific datasets. RAG relies more on external knowledge sources.

- Knowledge flexibility — RAG supports frequently updated knowledge without retraining. Fine-tuning requires periodic retraining.

- Training infrastructure — RAG primarily means selecting and updating data sources. Fine-tuning needs GPUs for efficient training.

- Inference speed — RAG retrieval steps add inference latency. Fine-tuned models are self-contained so can be faster.

- General capabilities — RAG preserves the versatility of large LLMs. Fine-tuning trades generality for specialization.

- Hybrids — Many applications use RAG for some capabilities and fine-tuning for others. Choose the best method per task.

Advanced perspectives comparing RAG and Fine-tuning:

- Hybrid RAG-tuning approaches — Rather than a binary choice, hybrid techniques aim to combine the strengths of both methods. For example, T0 partially fine-tunes certain layers of an LLM while leaving other layers frozen to retain capabilities.

- Multi-stage modeling — A promising approach involves first retrieving with RAG, then using the retrieved context to condition a fine-tuned model. This allows flexibly incorporating external knowledge while specializing parts of the model.

- Dynamic RAG vs static fine-tuning — RAG allows updating knowledge sources dynamically without retraining, while fine-tuning produces static specialized models. However, techniques like dynamic evaluation and continual learning aim to also evolve fine-tuned models online.

- Scaling laws — Fine-tuning often hits diminishing returns in performance with model size, while scaling RAG models may enable more consistent improvements as external knowledge is incorporated. This could make RAG more effective for developing large models.

- Hyper-personalization — RAG facilitates personalization via different knowledge per user without retraining models. Fine-tuning requires training each user’s model from scratch, limiting personalization. However, approaches like model-agnostic meta-learning for fine-tuning may alleviate this.

- Catastrophic forgetting mitigation — RAG inherently avoids forgetting pre-trained capabilities. But new fine-tuning techniques like elastic weight consolidation, synapse intelligence, and dropout scheduling aim to mitigate catastrophic forgetting as well.

- On-device deployment — Fine-tuned models are fully self-contained, allowing low-latency on-device deployment. Incorporating knowledge retrieval makes full RAG deployment on-device more challenging. However, approximations like distillation of retrieved knowledge into model parameters may enable deploying RAG systems on-device.

- Theory vs empirical — Theoretically, RAG should improve sample efficiency over fine-tuning by incorporating human knowledge. But empirical results have been mixed, often showing comparable performance. Further research is needed to realize RAG’s theoretical gains.

Exploring their combination is a promising path to maximizing the advantages of each approach.

Key Recommendations

Based on our analysis, here are some best practice:

- For client-facing applications like advisors and chatbots, prioritize retaining conversational capabilities with RAG over fine-tuning. Use fine-tuning sparingly for essential company-specific knowledge.

- For document and text analysis tasks, specialized fine-tuned models often outperform RAG. But consider RAG for rapidly evolving analysis like investments.

- Maintain access to large, general LLMs even if building custom models — RAG enables benefiting from their general competence.

- Audit fine-tuned models regularly to check for deviations from desired behavior compared to initial training data.

- Evaluate ensemble approaches combining specialized fine-tuned models with general conversational RAG models.

- Carefully select external knowledge sources for RAG — relevance, accuracy and ethics determine overall system performance.

- For client data and documents, retrieve only the essential portions rather than full text to balance relevance and privacy.

Conclusion

Choosing the right techniques like RAG or fine-tuning is crucial to maximize the performance of LLMs, smaller models, and pre-trained systems for financial services. The considerations are complex — depend on factors like model size, use case focus, infrastructure constraints, changing knowledge needs, and more. Blending RAG and fine-tuning is often optimal to achieve both conversational competence and domain expertise. As LLMs and augmented AI advance, RAG will become even more prominent — though still complemented by fine-tuning where it fits best. Companies that understand the nuances between these techniques will gain an AI advantage customized to their unique needs.

Leave a Reply